Learning Sensorimotor Capabilities in Cellular Automata

Learning robust self-organizing creatures with gradient descent


Spatially localized patterns in cellular automata have shown a lot of interesting behavior that has led to new understanding of self-organizing systems. While the notion of environment is a key point in Maturana and Varela's biology of cognition, studies on cellular automata have rarely introduced a well-defined environment in their systems. In this paper, we propose to add walls to a cellular automaton to study how we can learn a self-organizing creature capable of reacting to the perturbations induced by the environment, i.e. a robust creature with sensorimotor capabilities. We provide a method based on curriculum learning that is able to learn the CA rule leading to such creatures. The creatures obtained, using only local update rules, are able to regenerate and preserve their integrity and structure while dealing with the obstacles in their way.

Introduction

Behavior in living creatures is often seen as an action output in response to sensory input. These sensorimotor capabilities seem crucial for behaving better in an environment and therefore for increasing the chance of survival. When we think about producing output from sensory inputs, the first thing that often comes to mind is neural computation. However, many living things without a brain also have such sensorimotor capabilities: plants move to get more sun, and slime molds seem to use mechanical cues from their environment to choose in which direction to expand, using their body both for sensing and for computing the decision. We also find sensorimotor capabilities at the macro scale in swarms of bacteria, where a group of bacteria seems to avoid a wall of antibiotics, making a group decision for obstacle avoidance.

Furthermore, living beings with neural computation also seem to take advantage of some morphological computation. In fact, morphological computation theory states that the body also plays a role in this sensory-to-motor flow. Cognition is therefore not bounded to the brain.

In Maturana and Varela's work, cognition is centered around how an agent "reacts" to the perturbations induced by its environment, i.e. sensory stimuli. More precisely, they introduce the notion of the cognitive domain of a self-organizing system, which is the set of all perturbations induced by the environment that do not result in the destruction of the self-organizing system. Their notion of cognition is thus deeply linked with how a self-organizing creature tries to preserve its integrity in its environment.

Building on these theories, studies have applied those notions to examples of self-organizing systems. One of the main test beds is cellular automata, which consist of a grid of states where only local updates are allowed, and yet from which very complex self-organizing patterns can emerge. One famous cellular automaton is the Game of Life. The Game of Life, and especially one moving pattern, the glider, has been studied under this paradigm, showing again the richness and complexity of such systems. However, even if the glider has proven to be a good toy model for making those theories explicit, with interesting interactions, gliders are quite simple entities that are not very robust: many perturbations lead to their destruction. Also, in those works the environment was not well defined (with walls or food, for example) but rather consisted of other structures and creatures.

Other studies, taking inspiration from morphogenesis and from biological regrowth in some animals, focused on the recovery from deformation and damage, applying cellular automata to build and regenerate damaged parts. But none of them use the CA rule to move the creature.

In Lenia, which is a continuous cellular automaton, we can already observe sensorimotor capabilities (see section Lenia). However, even if there are interactions between some entities, there is no well-defined environment. Moreover, the search for new creatures in Lenia was first done by manually testing and mutating parameters, or with simple evolutionary algorithms, which might take time and luck to find the type of creature we really expect. Other studies focused on exploring the space of morphologies in Lenia as widely as possible, using intrinsically motivated explorers. However, these last techniques did not focus much on finding moving creatures.

Finding moving creatures in cellular automata differs from most classic RL experiments, which evolve a body and train a separate controller: in cellular automata, the body and its update rule are the controller.

Define things

In this work, we chose to add walls to Lenia to study the sensorimotor behavior of a self-organizing agent. We propose a method based on gradient descent to learn the CA update rule leading to robustness and sensorimotor capabilities in the presence of walls. From the deformations induced by the walls on some part of it, the creature we obtain makes new decisions at the macro level on where to go and how to react. What's even more interesting is that the computations behind these decisions are all made in the creature itself, at the morphology level. In this study, to characterize a creature as having sensorimotor capabilities, we check whether it can pass some basic tests of robustness to a diversity of obstacles.

The system

Formulas

Lenia

The cellular automaton we study in this work is Lenia. Lenia is a system of continuous cellular automata in which a wide variety of complex behaviors has already been observed, including what looks like sensorimotor capabilities. In this work we use the multi-channel, multi-kernel version of Lenia, but for simplicity we use only one channel for the creature and the other ones for the environment.

A Lenia system, like all CA, starts from an initial pattern and iteratively updates every pixel based on its neighbours. The CA rule is given by the kernels and their associated growth maps, which are both parametrized. So to find creatures we need to find both interesting CA rules and interesting initializations. This is different from the Game of Life, where the CA rule is fixed and only initialization patterns are searched. Finding interesting rules by random exploration can be hard, especially in higher dimensions or when the number of kernels is large. This motivates our choice of a gradient-based method.
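To make the update concrete, here is a minimal sketch of one Lenia step on a tiny toroidal grid, written in pure Python so that it is self-contained. It assumes the commonly used single-kernel Lenia form: a Gaussian-shaped growth map and a clipped additive update with time step `dt`. The function names and the values of `m`, `s` and `dt` are illustrative assumptions, not the settings used in this work.

```python
import math

def growth(u, m, s):
    """Gaussian-shaped growth map with values in [-1, 1], peaking at u == m."""
    return 2.0 * math.exp(-((u - m) ** 2) / (2.0 * s ** 2)) - 1.0

def lenia_step(grid, kernel, m=0.15, s=0.015, dt=0.1):
    """One synchronous update of every pixel on a toroidal grid.

    grid   : 2D list of floats in [0, 1]
    kernel : dict mapping (dy, dx) offsets to weights (assumed to sum to 1)
    """
    h, w = len(grid), len(grid[0])
    new = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Weighted sum of the neighbourhood (a local convolution).
            u = sum(wt * grid[(y + dy) % h][(x + dx) % w]
                    for (dy, dx), wt in kernel.items())
            # Add the growth and clip the result back into [0, 1].
            new[y][x] = min(1.0, max(0.0, grid[y][x] + dt * growth(u, m, s)))
    return new
```

In this sketch the "CA rule" is the pair (`kernel`, growth parameters) and the initialization is `grid`; both would be searched or learned.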

A wide variety of patterns has been found in Lenia, using hand-made exploration and mutation, evolutionary algorithms, or exploratory algorithms. However, as the studies based on exploration algorithms focused on covering the morphology space, and as moving creatures are hard to find, these studies did not find many moving creatures. The other studies focused a lot on spatially localized patterns, and especially moving ones. You can find a library of creatures (especially moving ones) found in the first version of Lenia at this link.

The moving creatures found are long-term stable and can have interesting interactions with each other, but some, such as the orbium (which you can find below), are not very robust, for example to collisions with each other or with walls (see next section). To be fair, they are quite simple, with only one kernel for the update rule.

Videos from Bert Chan's twitter : https://twitter.com/BertChakovsky/status/1219332395456815104 https://twitter.com/BertChakovsky/status/1265861527829004288

Other, more complex creatures with multiple kernels seem to resist collisions better and seem to be able to sense the other creatures. These creatures show sensorimotor capabilities, as they change direction in response to interactions with other creatures. However, the other creatures that form the environment are in the same channel as the creature itself. Therefore they can be sensed through the kernel at a larger distance (explaining the large gap in the left video).

Walls

Our work, contrary to previous work on Lenia and on most cellular automata, clearly separates what belongs to the environment from what does not. We also hand-craft and fix the environment rule to properly define the environment we want. In this work we focus on walls in the environment, as navigating between obstacles requires sensorimotor capabilities.

To implement walls in Lenia, we added a separate wall channel with a kernel from the wall channel to the creature channel. This kernel produces a huge negative growth where there are walls and has no impact on pixels where there are no walls (it is a very localized kernel). This way we prevent any growth in the pixels where there are walls. This is similar to setups where an antibiotic zone serves as an obstacle in which the bacteria can't live. The creature can only sense the wall through the changes and deformations the wall causes on the creature. In fact, the only kernel that goes from the wall channel to the creature is the fixed kernel that we impose. And as this kernel is localized, the creature has to "touch" the wall to sense it. To be precise, the creature can only know that there is a wall because it won't be able to grow in that area, which perturbs the creature's shape locally. (And because of the cellular automaton nature of the creature, this information then has to be transmitted to the other cells.)

Note that we used a kernel for the walls so that the system stays under the Lenia paradigm using local kernels only for the updates.
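The effect of such a wall kernel can be sketched as follows. This is a simplified illustration, assuming the wall contribution is added to the creature's own growth before the clipped update; the function name and the values of `dt` and `wall_growth` are hypothetical and only chosen so that a wall pixel is driven to 0 in a single step.

```python
def step_with_walls(creature, growth, walls, dt=0.1, wall_growth=-100.0):
    """Apply one clipped update where wall pixels add a large negative growth.

    creature, growth and walls are same-sized 2D lists; walls holds 0.0 or 1.0.
    The wall term is strictly local: it only affects the pixel it covers.
    """
    return [
        [min(1.0, max(0.0, a + dt * (g + wall_growth * w)))
         for a, g, w in zip(row_a, row_g, row_w)]
        for row_a, row_g, row_w in zip(creature, growth, walls)
    ]
```

Pixels without a wall are updated exactly as before, so the creature only "feels" the wall through the mass it loses where the two overlap.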

In this study, the creature can't have any impact on the walls. This differs from other studies, such as those in the Game of Life, where the creature also perturbs its environment.

As said before, glider-type creatures have been found in one-channel Lenia. However, they are not very robust to walls, as shown below.

The multi-channel creature (bottom left) dies from certain collisions with the wall. The multi-kernel one is able to sense the wall and resist perturbations, but only if we slow down time so that it can make more, smaller updates. Its movements are also somewhat erratic.

Differentiable Lenia

Now that the environment is defined, we want to learn both the initialization and the CA rule leading to interesting behaviors. All parameters of the CA rule will be optimized, as well as the initialization, which will be a square of fixed size (each pixel will have its value optimized).

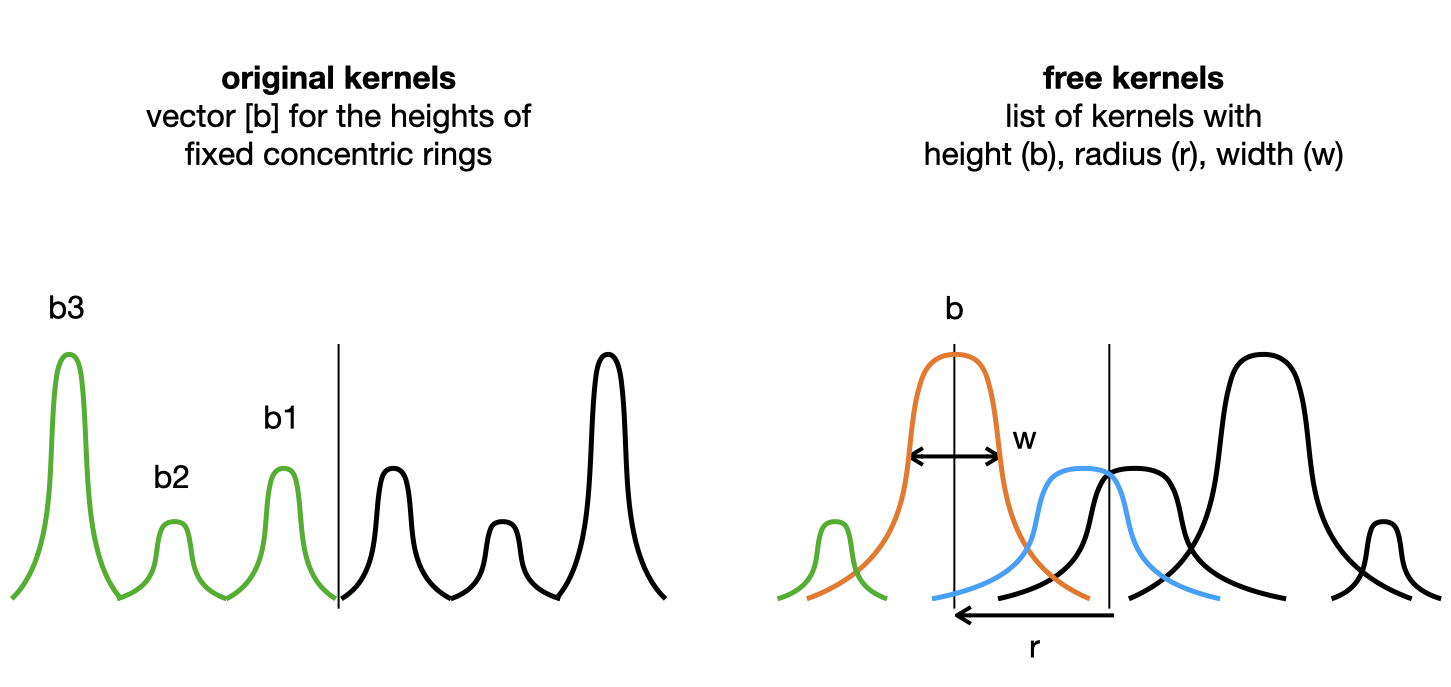

To learn these parameters we chose to use gradient descent. We thus tried to make Lenia as differentiation-friendly as possible. To do so, the main shift is to use "free kernels", i.e. kernels in the form of a sum of n overlapping Gaussian bumps: $$x \rightarrow \sum_{i=1}^{n} b_i \exp\left(-\frac{(x-rk_i)^2}{2w_i^2}\right)$$ The parameters are then three n-dimensional vectors: b for the heights of the bumps, w for their widths and rk for their centers.
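As an illustration, the radial profile above can be evaluated and sampled on a grid as follows. This is a sketch under assumptions: the distance is taken to be normalized by the kernel radius, the kernel is set to zero beyond distance 1, and it is normalized to sum to 1 (which presumes the sampled values have a positive total). The function names are ours.

```python
import math

def free_kernel_profile(x, b, w, rk):
    """Sum of n Gaussian bumps evaluated at normalized distance x."""
    return sum(
        b_i * math.exp(-((x - rk_i) ** 2) / (2.0 * w_i ** 2))
        for b_i, w_i, rk_i in zip(b, w, rk)
    )

def free_kernel(radius, b, w, rk):
    """Sample the profile on a (2 * radius + 1)^2 grid and normalize it."""
    size = 2 * radius + 1
    k = [[0.0] * size for _ in range(size)]
    for i in range(size):
        for j in range(size):
            # Distance to the kernel center, in units of the kernel radius.
            d = math.hypot(i - radius, j - radius) / radius
            if d <= 1.0:
                k[i][j] = free_kernel_profile(d, b, w, rk)
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]
```

Because every bump height, width and center enters the profile smoothly, all 3n parameters receive a gradient, which is the point of this parametrization.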

We made this shift because in the vanilla version of Lenia, the shape of the kernel was only given by a vector b of arbitrary size (but often of size at most 4), and the number of bumps was given by the number of coefficients in b that are > 0. The fact that the number of bumps depends on the number of coefficients > 0 prevents proper differentiation: if a coefficient is at 0, it won't change with gradient descent as it doesn't play a role, and if a coefficient is > 0, a gradient step can push it below 0, which causes a strong unexpected change.

We could have fixed the number of bumps to an arbitrary value like 3 and only optimized the heights while keeping them > 0, but this would have been a strong limitation on the shape. The "free kernels", in addition to being differentiable, allow more flexibility than the vanilla bumps, at the cost of more parameters.

However, even so, differentiating through Lenia can be difficult, because we often have a large number of iterations and each iteration has its result clipped between 0 and 1. We should thus limit ourselves to a few iterations when training.
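The effect of clipping on gradients can be seen on a toy scalar recurrence. This is only an illustration, not Lenia itself: `p` stands in for a rule parameter, and the gradient is estimated by finite differences rather than by automatic differentiation.

```python
def clip01(v):
    """Clip a value into [0, 1], as done after every update."""
    return min(1.0, max(0.0, v))

def rollout(p, x0=0.5, steps=10, dt=0.1):
    """Iterate x <- clip(x + dt * p) and return the final value."""
    x = x0
    for _ in range(steps):
        x = clip01(x + dt * p)
    return x

def finite_diff(f, p, eps=1e-4):
    """Central finite-difference estimate of df/dp."""
    return (f(p + eps) - f(p - eps)) / (2.0 * eps)
```

With a small `p` the state never saturates and the final value still depends on `p`; with a large `p` the state hits 1 after a few steps and the estimated gradient collapses to 0, so gradient descent receives no signal.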

Can we learn moving creatures in Lenia?

Before trying to find sensorimotor capabilities in our system, a first step is to find moving creatures, like the glider in the Game of Life. Note that movement in a cellular automaton differs from other types of movement, like muscle contraction or soft robots, in that moving means growing at the front and dying at the back. This suggests that creatures that move are more fragile, because they sit in a delicate equilibrium between growth (to move forward) and dying (because otherwise we would have infinite growth).

In this paper, we learn to develop morphology and motor ability at the same time. The CA rule is both applied to grow the creature from an initial state and acts as the "physics" that makes it move.

A target image with an MSE loss seems effective for learning a CA rule that leads to a certain pattern. It is also a very informative loss, which helps with the vanishing gradient problem; this made us choose it over other losses such as maximizing the coordinate of the center of mass. The first target shape we tried was a single disk. However, after seeing that the robust creatures obtained seemed to have a "core" and a shallow envelope, we informally chose to move to two superposed disks: a large shallow one with a thick smaller one on top. The target shape has the formula \(0.1*(R<1)+0.8*(R<0.5)\). We chose on purpose to keep the sum smaller than 1: since we clip pixels to 1 after each update, it is better to have pixels below 1 than saturated pixels if we want gradients to flow.
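A sketch of this target and of the loss, assuming that R denotes the distance to the target center divided by a target radius (the normalization of R, the function names and the grid layout are our assumptions):

```python
import math

def target_shape(size, center, radius):
    """Two superposed disks: 0.1 * (R < 1) + 0.8 * (R < 0.5)."""
    cy, cx = center
    target = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            r = math.hypot(y - cy, x - cx) / radius  # normalized distance R
            target[y][x] = 0.1 * (r < 1.0) + 0.8 * (r < 0.5)
    return target

def mse(a, b):
    """Mean squared error between two same-sized 2D grids."""
    n = len(a) * len(a[0])
    return sum((u - v) ** 2
               for row_a, row_b in zip(a, b)
               for u, v in zip(row_a, row_b)) / n
```

The core reaches 0.9 rather than 1, so a creature matching the target exactly still sits below the clipping threshold.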

However, simply putting a target shape far from the initialization and optimizing towards it does not work most of the time. In fact, it works only when the target is not too far from (i.e. overlaps a little with) where the creature ended up before optimization (so after the random initialization). This comes from the fact that applying many steps, each clipped, prevents gradients from flowing. The solution we propose to go further is curriculum learning.

IMGEP and Utility of curriculum

In fact, once we obtain a creature able to go a little further than the initialization, we can push the target a little bit and learn to reach it. This time the new target needs to overlap with where the creature is able to go after the first optimization. Then we just need to iterate this process.

The effectiveness of curricula for complex tasks has already been shown in prior work in which one agent designs increasingly complex tasks, trying to make them neither too hard nor too easy for another learning agent. It has also been shown in complex self-organizing systems, where the system first needed to learn easy computations in order to be stabilized.

One modular way to introduce a curriculum is to use IMGEP, which has already been used as an exploration tool in Lenia to explore the morphological space.

The general idea of IMGEP is to iteratively set new goals to achieve and, for each of these goals, to try to learn a policy (here a policy is simply an initial state and the CA rule) that would suit this goal. An IMGEP thus needs an interesting way to sample new goals, for example based on intrinsic reward. It also needs a way to track the progress on a goal, and a way to optimize toward it. It may also use the knowledge acquired on other goals to learn new goals or reach them more quickly.

Figure: a Lenia step, shown step by step.

In our case, the goal space is simply a 2-dimensional vector representing the position of the center of mass of the creature at the last timestep. The way we sample goals depends on the task, but to obtain a moving creature that goes far in the grid, we randomly sample positions in the grid, biasing the sampling toward one edge of the grid. To try to achieve a goal, we use the MSE between the last state and our target shape centered at the target goal. We reuse acquired knowledge by initializing the parameters with the ones that achieved the closest goal.
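Goal sampling and policy selection can be sketched as below. The particular bias (raising a uniform sample to a power so that it skews toward the right edge) and the `bias` parameter are assumptions made for illustration; the text only states that sampling is biased toward one edge.

```python
import random

def sample_goal(width, height, rng, bias=2.0):
    """Sample a target (x, y) with x skewed toward the right edge.

    For bias > 1, u ** (1 / bias) pushes a uniform u in [0, 1) toward 1.
    """
    x = width * rng.random() ** (1.0 / bias)
    y = height * rng.random()
    return (x, y)

def closest_policy(history, goal):
    """Return the parameters whose reached position is nearest to the goal.

    history is a list of (params, reached_position) pairs.
    """
    def sq_dist(entry):
        px, py = entry[1]
        return (px - goal[0]) ** 2 + (py - goal[1]) ** 2
    return min(history, key=sq_dist)[0]
```

For example, `closest_policy(history, sample_goal(100, 50, random.Random(0)))` picks the warm start for the next optimization.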

The overall method can be summarized as follows:

- Initialize the history randomly (sampling random parameters)
- Loop (number of IMGEP steps):
  - Sample a target position/goal
  - Select the policy that achieved the closest position/goal
  - Loop (number of optimization steps):
    - Run Lenia
    - Gradient descent toward the disk at the target position
  - See what position (i.e. goal) is achieved
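The loop above can be sketched as a generic function. This is a skeleton under assumptions: the inner optimization and the rollout are passed in as callables (`optimize` and `reached_goal` are placeholder names), since their details depend on the differentiable Lenia implementation.

```python
def imgep(init_history, sample_goal, optimize, reached_goal, n_imgep_steps):
    """Curriculum loop that warm-starts each goal from the closest past result.

    init_history : list of (params, reached_position) pairs
    sample_goal  : () -> goal (a tuple of coordinates)
    optimize     : (params, goal) -> params, the inner gradient-descent loop
    reached_goal : params -> position actually reached after a rollout
    """
    history = list(init_history)
    for _step in range(n_imgep_steps):
        goal = sample_goal()
        # Select the policy whose reached position is closest to the goal.
        params, _pos = min(
            history,
            key=lambda e: sum((a - b) ** 2 for a, b in zip(e[1], goal)),
        )
        # Run Lenia and descend toward the target placed at the goal.
        params = optimize(params, goal)
        # Record what position the optimized policy actually reaches.
        history.append((params, reached_goal(params)))
    return history
```

The history doubles as the curriculum: as soon as one policy reaches a little further, goals a little beyond it become learnable.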

Can we learn robust creatures with sensorimotor capabilities?

The initialization is the yellow square. The green dashed line is at the same position in both panels.

Left: library of reached goals/positions in green, target goal in red. The policy selected is the one that reached the position in the purple circle.

Right: the blue disks are the obstacles, the red disk is the target shape, and the green shape is the creature at the last timestep before optimization.

Now that we have an algorithm capable of learning moving creatures in Lenia, the next step is to find a way to learn a creature that resists and avoids obstacles in its environment, using the deformations the environment induces on it as a way to sense. In this section, we try to learn from scratch a single CA rule and initialization that lead to a creature that builds itself, moves and regenerates. We thus learn a single global rule for multiple functions, contrary to previous work that separates regenerating and building into two different CA.

What we want to obtain is a creature that is able to generalize to different obstacles. To do so, we train the creatures using the method from the previous section, but adding randomly generated obstacles. This way our gradient descent becomes a stochastic gradient descent, with the stochasticity coming from the sampling of the obstacles. The learning process thus encounters a lot of different configurations and may find general behaviors. In practice, we only put obstacles in half of the lattice grid. This way, half of the grid is free from obstacles: there we first learn a creature that is able to move without any perturbation, as in the previous section, and then, as we push the target further and further, the creature starts to encounter obstacles. The deeper the target position is, the more obstacles the creature encounters, and so the more robust it has to be. At the beginning, the creature is only slightly perturbed by one obstacle, and the target shape optimizes the creature to get past the obstacle and recover. Then, for the creature to go further, it has to encounter more obstacles and still be able to resist the second one even if the first one perturbed it. So the curriculum is made by going further and further, because the further the creature goes, the more obstacles it has to resist. See the appendix for more details on the obstacles.

In the IMGEP, to take into account the fact that the position reached depends on the obstacle configuration, the reached goal is an average of the positions reached over different configurations of obstacles.

- Initialize the history randomly (sampling random parameters)
- Loop (number of IMGEP steps):
  - Sample a target position/goal
  - Select the policy that achieved the closest position/goal
  - Loop (number of optimization steps):
    - Sample random obstacles
    - Run Lenia
    - Gradient descent toward the disk at the target position
  - See what position (i.e. goal) is achieved (mean over several runs with random obstacles)
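The two additions to the loop, random obstacles and an averaged reached position, can be sketched as follows. The choice of the right half of the grid, the obstacle radius and the number of configurations are assumptions for illustration, and `run` is a placeholder for a Lenia rollout returning the final center of mass.

```python
import math
import random

def sample_obstacles(size, rng, n_obstacles=8, radius=3):
    """Wall mask (1.0 = wall) with disks placed only in the right half."""
    walls = [[0.0] * size for _ in range(size)]
    for _ in range(n_obstacles):
        cy = rng.uniform(0, size - 1)
        # Keep centers far enough right that the left half stays free.
        cx = rng.uniform(size / 2 + radius, size - 1)
        for y in range(size):
            for x in range(size):
                if math.hypot(y - cy, x - cx) <= radius:
                    walls[y][x] = 1.0
    return walls

def mean_reached_position(run, params, size, rng, n_configs=5):
    """Average the final position over several random obstacle layouts.

    run(params, walls) is a placeholder returning the final (x, y).
    """
    positions = [run(params, sample_obstacles(size, rng))
                 for _ in range(n_configs)]
    return tuple(sum(c) / n_configs for c in zip(*positions))
```

Resampling the obstacles at every optimization step is what makes the gradient descent stochastic, while the averaged position gives a more stable entry for the goal history.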

Overcoming "bad initialization" problem

The arrows show the dependencies between the creatures: the tail of an arrow is the creature that initializes the optimization, and the head of the arrow is the creature obtained after optimization. The arrows are only there to show how much the initialization and the first optimization steps matter; the dependencies themselves are not used by the method.

Note that the random initialization of the history with random parameters, and the first steps, have a huge impact on the performance of the method, because they are the basis on which most of the subsequent optimization is built. In fact, we select one random initialization and do the first optimization step on top of it. As this leads to a creature that goes a little bit further, when we sample a new goal we will most of the time select this creature as the basis for the new optimization. This leads to a creature going further still, which in turn will also be selected afterwards. So in most runs, most of the creatures are based more or less closely on the random initialization selected and on the first steps. However, if the initialization is "bad", or if the first IMGEP steps, on which the next ones will be based, go in the "wrong" optimization direction, optimization problems can arise.

While training with this algorithm, the optimization sometimes could not produce a creature that gets past the obstacles, and would diverge to exploding or dying creatures. This can be mitigated by adding random mutations before optimizing, which can lead by luck to better starting points for the optimization. This may unstick the situation; however, the creatures after mutation are often not that good, and most of the time far from the previously achieved goal (because mutation often makes "suboptimal" creatures that may be slower than the one before mutation), which prevents learning. So mutations can help unstick a situation but also slow down training. This is why we apply fewer optimization steps to the mutated ones; see the appendix for more details.

This does not solve the problem 100% of the time, which is why we also apply initialization selection. We run the first steps of the method (random initialization and optimization) until we find an initialization that gives a good loss for the first 3 deterministic targets (placed before the obstacles). The first steps are the basis of most of the creatures, so if they struggle to make a moving creature, it will be hard to learn sensorimotor capabilities on top. This way we only keep the initializations and first steps that learned quickly and seemed to have room for improvement.

Results

In this section, we test the creatures to explore their sensorimotor capabilities and robustness. We also test the creatures obtained in situations that they have not encountered during training.

How well do the creatures obtained generalize?

Are the creatures long-term stable?

Even if we cannot know whether a creature is indefinitely stable, we can test it for a reasonable number of timesteps. The result is that the creature obtained with IMGEP with obstacles seems stable for 1750 timesteps, while it has only been trained to be stable for 50 timesteps. This might be because, as it learned to be robust to deformation, it learned a strong preservation of its structure to prevent any explosion or death when perturbed a little bit. And so when there is no perturbation, this layer of "security" strongly preserves the structure. However, training a creature only for movement (without obstacles, so with no perturbation during training) sometimes led to creatures that were not long-term stable. This is similar to what has been observed in previous work, where training to grow a creature from the same initialization (a pixel) led to patterns that were not long-term stable, but adding incomplete or perturbed patterns as initializations, to learn to recover from them, led to long-term stability (by making the target shape a stronger attractor).

Are the creatures robust to new obstacles?

The resulting creatures are very robust to wall perturbations and able to navigate in difficult environments. They seem able to recover from perturbations induced by various shapes of wall, including vertical walls (see the interactive demo). One very surprising emergent behavior is that the creature is sometimes able to come out of dead ends, showing how well this technique generalizes. There are still some failure cases, with creatures that can become unstable after some perturbations, but the creatures are most of the time robust to a lot of different obstacles. The generalization is due to the large diversity of obstacles encountered by the creature during learning, because 8 randomly placed circles can lead to a very diverse set of obstacles. Moreover, as it learns to go further, the creature has to learn to collide with several obstacles one after the other, and so to recover fast but also to still be able to resist and sense a second obstacle while not having fully recovered.

Are the creatures robust to moving obstacles?

We can make a harder, out-of-distribution environment by adding movement to the obstacles. For example, we can make a bullet-like environment where tiny wall disks are shifted by a few pixels at every step. The creature seems quite resilient to this kind of perturbation, even if we can see that a well-placed perturbation can kill it. This kind of environment differs a lot from what the creature has been trained on, and therefore shows how much the creature learned to quickly recover from perturbations, even unseen ones.

Are the creatures robust to asynchronous updates with noise?

As done in previous work, we can relax the assumption of synchronous updates (which assumes a global clock) by making the updates stochastic. By applying a random mask to the cells, where each cell is on 50% of the time on average, we get partially asynchronous updates. The creature we obtained from the previous training with synchronous updates seems to behave "normally" with stochastic updates. The creature is slowed down a little bit, but this is what we can expect, as each cell is updated on average 50% of the time.
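A sketch of such a masked update, assuming the synchronous step has already produced a `proposed` next state (the function name and the `p_update` parameter are ours):

```python
import random

def stochastic_update(old, proposed, rng, p_update=0.5):
    """Accept each cell's proposed value with probability p_update.

    Cells that are not selected keep their old value for this step.
    """
    return [
        [new if rng.random() < p_update else cur
         for cur, new in zip(row_old, row_new)]
        for row_old, row_new in zip(old, proposed)
    ]
```

With `p_update=1.0` this reduces to the synchronous update; with `p_update=0.5` roughly half of the cells change at each step, which is consistent with the creature moving more slowly.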

Are the creatures robust to a change of scale?

The grid is the same size as above, to give you an idea of the scale change (kernel radius × 0.4).

We can change the scale of the creature by changing the radius of the kernels as well as the size of the initialization square. This way we can make much smaller creatures, which therefore have fewer pixels to do the computation. This scale reduction has a limit, but we can get pretty small creatures. The creatures still seem to be quite robust and able to sense and react to their environment while having less space to compute. They are even able to reproduce; however, they seem to be less robust than the bigger ones, as we can see some dying from collisions. We can also go the other way and make much bigger creatures, which therefore have more space to compute.
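A sketch of such a rescaling, assuming the kernel radius is simply multiplied by the scale factor and the initialization square is resized by nearest-neighbour sampling (the resampling scheme and the function names are our assumptions):

```python
def rescale_radius(radius, scale):
    """Scale the kernel radius, keeping it at least 1 pixel."""
    return max(1, round(radius * scale))

def rescale_square(square, scale):
    """Resize a square 2D list by nearest-neighbour sampling."""
    n = len(square)
    m = max(1, round(n * scale))
    return [
        [square[min(n - 1, int(i / scale))][min(n - 1, int(j / scale))]
         for j in range(m)]
        for i in range(m)
    ]
```

For instance, `rescale_radius(28, 0.4)` and `rescale_square(init, 0.4)` would shrink a creature trained with a kernel radius of 28 while keeping the learned kernel shapes and growth parameters unchanged.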

Are the creatures robust to a change of initialization?

While we did not pay any attention to initialization robustness, and the creature's initialization has been learned with many degrees of freedom, we can check whether the same creature can emerge from other (maybe simpler) initializations. This would show how prone the learned CA rule is to growing the shape and maintaining it. As the creature learned to recover from a perturbed morphology, we can expect the shape to be a strong attractor, thus allowing more freedom in the initialization. In fact, what we find in practice is that the creature can emerge from other initializations, in particular, as shown here, a circle with a gradient. Bigger initializations also lead to multiple creatures forming and separating from each other (see next section for more about individuality). However, the robustness to initialization is far from perfect, as other initializations easily lead to death, for example here a circle of inappropriate size.

Multi creature setting

By adding more initialization squares in the grid, we can add several creatures with the same update rule, letting us observe multi-creature interactions. As pointed out in previous work, other entities are also part of the environment for a creature and can give rise to nice interactions. Maturana and Varela even refer to this kind of interaction as communication. Note that the creature never encountered any other creature during its training and was always alone.

Individuality

The creatures obtained show strong individuality preservation. In fact, creatures engage in non-destructive interactions most of the time, without merging. We can tune the weights of the kernels (especially the growth-limiting ones) to make the merging of two creatures harder. By increasing those growth-limiting kernels, the repulsion between two entities gets stronger and they simply change direction. (Individuality has also been observed in the "orbium" creature in Lenia, for example, but it is much more fragile: a lot of collisions lead to destruction or explosion.)

Attraction

If they are too far from each other, there is no attraction.

One other type of interaction between two creatures of the same species (governed by the same update rule/physics) is creatures getting stuck together. The two creatures seem to attract each other a little bit when they are close enough, leading to the two creatures being stuck together and going in the same direction. When they encounter an obstacle and separate briefly, their attraction brings them back together. Even when they are stuck together, from the point of view of a human observing the system, we can still see 2 distinct creatures. This type of behavior has been studied in the Game of Life with the notion of consensual domain.

Reproduction

Another interesting interaction we observed during collisions was "reproduction". In fact, for some collisions, we could observe the birth of a third entity. This kind of interaction seemed to happen when one of the two colliding entities was in a certain "mode", like when it had just hit a wall. Our intuition is that when a creature hits a wall, it has to produce a growth response in order to recover. If, during this growth response, another entity adds some perturbation, it might separate this growth from the entity, and then this separated mass, through strong self-organization, grows into a complete individual.

Still failure cases

Even with initialization selection and small mutations, the algorithm sometimes doesn't seem able to learn to go past obstacles, and we can't reach goal positions beyond a certain limit near the beginning of the walls.

The main creature used in most of the demos and tests above is very robust, but with different configurations it is possible to kill or explode other creatures. However, they were only trained for 50 timesteps (2 seconds in the clips above) and always with 8 obstacles of the same type (even if their positions induced diversity). Further training of the parameters for more robustness should be achievable.

In the multi-creature case, we can observe death and explosion, but the creatures have not been trained for that setting.

Classic CA cognition

Neural CA

Soft robots

Work has focused on designing the morphology of soft robots using cellular automata. Those soft robots have a separate controller or motor, which is either automatic or a controller using feedback from the environment; some can also shapeshift to recover from injury.

Swarm robotics

We can draw parallels with swarm robotics, which dictates how several agents should arrange themselves in "harmony".

Exploration

Other works have focused on exploring the system as much as possible, trying to find diversity in an open-ended manner.

Discussion

What's interesting in such systems is that the computation of decisions is done at the macro (group) level, showing how a group of simple identical entities can, through local interactions, make "decisions" and sense at the macro scale. Seeing these creatures, it's even hard to believe that they are in fact made of tiny parts all behaving under the same rules. Moreover, the creatures presented here are all one-channel creatures: there is no hidden channel where some value could be stored.

In order to navigate, the creature first needs to sense the wall through a deformation of the macro creature. Then, after this deformation, it has to make a collective "decision" on where to grow next, and then move and regrow its shape. We can even cause the deformation ourselves by removing a part of the creature. It's not clear, looking at the activity of the kernels, which ones are responsible for these decisions, if not all of them. How the decision is made remains a mystery. Moreover, some cells don't even see the deformation because they are too far away, so some messages from the ones that do see it have to be transmitted.

Maybe each kernel has its own purpose: some may be responsible for growth, some for detecting deformation, some for decisions. If that is the case, we could even see those creatures as modular, adding new functionalities by adding new kernels. However, as there is a quite strict equilibrium between kernels, we doubt that we could simply plug in new kernels without disturbing this equilibrium.

One major difference between the neural CA model used in previous work and Lenia is the radius of the neighbourhood of each cell, which is quite big in our case. In fact, that work uses a Moore neighbourhood (direct neighbours and diagonals), while we use kernels of large size: a radius of 28 or so during training, and we can downscale our creature to a radius of approximately 6. The training radius is an arbitrary choice, although a large radius helps to train fast: the creature overlaps with the target shape more easily and from a larger distance, so we can make larger curriculum steps, and having a larger target area may also help the MSE optimization.

Maybe with a message-passing-like model we could get something equivalent with a Moore neighbourhood. One interesting experiment would be to try to fit a neural CA on the creature obtained here and see whether it can make approximately the same creature with a Moore neighbourhood. We also think that hidden channels could help in this case, maybe by storing some value. One other difference from that work is that our kernels are totally symmetrical while theirs have a notion of up and down, right and left, which might be helpful if we want the creature to have a preferred direction and to know in which direction it is pointing.

Surprisingly, some creatures looked a lot like (in terms of morphology) creatures from previous work that were obtained by evolutionary algorithms or by hand-made mutations, but ours seem to be far more robust.

Even if some basic creatures with a more or less good level of sensorimotor capabilities have already been found in Lenia by random search and basic evolutionary algorithms, this work still provides a method able to easily learn the update rule from scratch, in a high-dimensional parameter space, leading to different robust creatures with sensorimotor capabilities. We also think that the ideas presented here can be useful for learning parameters in other complex systems that can be very sensitive, especially the use of curriculum learning, which seems to help a lot.

We focused a lot on responsive action and robustness in this paper. But studying how the creature spots a deformation might be interesting for understanding how our body knows when growth isn't over. Studying how the computation of a "decision" is made at a higher level (and scale) than just the CA rule (for example when encountering a wall) is a very interesting next step.

One other interesting direction is to add even more constraints to the environment, like food or energy for example. We tried a little bit to add food in Lenia, but we are not satisfied with the result. We think that adding food to the environment might be a great leap toward the search for more advanced behavior in Lenia, for example by adding competition between individuals or species for food. From this competition and these new constraints, interesting strategies could emerge.